Chapter 5 From registration to re-identification: Exploring the interplay of data matching software in routine identification practices

Abstract

In migration management and border control, identifying individuals across data infrastructures frequently demands intricate processes involving integrating and aligning diverse data across various organizations, temporal contexts, and geographical boundaries. While existing literature predominantly focuses on first registration and identification, this chapter takes a novel empirical route by investigating the “re-identification” of applicants in bureaucratic processes within the Netherlands’ Immigration and Naturalization Service (IND). Re-identification is conceptualized as the continuous utilization and interconnection of data from various sources to ascertain whether multiple sets of identity data correspond to a singular real-world individual. Through the lens of re-identification, the research examines the iterative processes of identifying applicants across various stages of bureaucratic processes, drawing from fieldwork and interviews conducted at the IND and its data matching software provider. The study delves into the IND’s designed infrastructure for applicant re-identification, particularly the tools for searching and matching identity data. By contrasting design and practical use, the research uncovers multiple forms of data friction that may hinder re-identification. Furthermore, exploring the costs stemming from failed re-identification, manifested through duplicate records and the labor-intensive deduplication process, highlights the evolving bureaucratic re-identification practices and their links with transnational security infrastructures. The findings contribute to debates about the materiality and performativity of identification in two ways. Firstly, they redirect attention from first registration and identification to encompass re-identification practices across data infrastructures. 
Through an interpretative framework developed in the analysis, re-identification is further demonstrated not as a singular process but as a range of iterative practices. Secondly, the findings underscore that while integrating data matching tools for re-identification alleviates data friction, it inadvertently comes with certain costs. This integration involves a redistribution of re-identification competencies and labor between the IND and the commercialized data matching engine, and it potentially shifts the burden of costs related to failed re-identification to different parts of the bureaucratic system.

- Contribution to research objectives

- To examine the relationship between identity data matching technologies and routine identification practices.

- This chapter provides an in-depth analysis of the interconnections between identity data matching technologies and routine identification practices, focusing on the operational intricacies of re-identification within migration management, such as in the context of residency or naturalization applications. Through empirical investigation, the chapter explores the utilization and interconnection of data from various sources to ascertain whether multiple database records correspond to individual applicants. Comparing the design of data matching tools with their real-world implementation unveils multiple forms of data friction that can impede the IND’s re-identification processes. One form of friction arises from variations in the precision and accuracy of identity data during its transformation across different mediums, which subsequently influences the formulation of search queries. Furthermore, data friction can arise from the opaque calculation of match results by the tools, which makes it challenging for IND staff to understand search results and leads to the need to refine search parameters and strategies. The investigation highlights the possible costs of these forms of friction by examining the consequences of unsuccessful re-identification, exemplified by duplicate records.

- Infrastructural inversion strategy used

- Second Inversion Strategy — Data Practices

- This chapter uses the second infrastructural inversion strategy to examine the practices related to identity matching and linking across data infrastructures. Using this strategy, the chapter’s findings highlight a significant diversity in re-identification practices within the IND. This diversity emerges in two main aspects. On the one hand, re-identification practices are characterized by the information available to staff during the process. On the other hand, the precision criteria necessary for successful re-identification exhibit significant variation. Building on these observations, the chapter develops an interpretative framework that categorizes re-identification practices based on the demands of interpreting search inputs and results. The resulting matrix recognizes re-identification not as a solitary process but as a range of iterative practices. These practices encompass a variety of scenarios, including direct applicant interactions, staff managing phone conversations, handling application forms sent via postal services, and automated re-identification processes.

- Contribution to research questions

- RQ2: How do organizations that collect information about people-on-the-move search and match for identity data in their systems? How is data about people-on-the-move matched and linked across different agencies and organizations?

- This chapter addresses RQ2 by analyzing a range of re-identification practices within the IND, within the broader collaborative framework of the Netherlands’ migration chain, and extending to transnational data infrastructures. Within the IND, these practices span various contexts, from direct applicant interactions to staff managing telephone conversations and processing physical application forms. Across the broader migration chain, the use of v-numbers as identification numbers emerges as one means to match and link data across systems. However, the study also uncovers instances where re-identification efforts falter due to, for instance, inconsistencies in identification practices across the migration chain partners. Furthermore, the analysis of the deduplication process unveils a connection to transnational systems, as the IND and migration chain partners harness data from prominent European Union information systems to forge connections among seemingly disparate records within their respective databases.

Figure 5.1: The axes pertaining to the methodological framework in relation to chapter 5.

- Contribution to the main research question

- How are practices and technologies for matching identity data in migration management and border control shaping and shaped by transnational commercialized security infrastructures?

- This chapter addresses the main research question by investigating the interplay between the re-identification practices of the IND and a commercially developed data matching system. The integration of proprietary data matching tools, designed by a private entity, not only signifies a transformation in the IND’s re-identification approach but also underscores the redistribution of re-identification expertise and competencies as these are embedded within a proprietary data matching system. Furthermore, the chapter highlights a link between the deduplication process and transnational systems. The IND and migration chain partners employ data from prominent European Union information systems, forging connections among apparently disparate records within their databases. Moreover, the findings demonstrate the challenges vendors face in designing custom-made solutions versus standardized approaches for deduplication. The complexities of defining identity and duplicate records, inherently linked to the specific organizational context, became apparent during an upgrade of automated duplicate detection tools. These findings emphasize that re-identification processes and associated technologies are far from isolated; they are intricately entwined within broader commercialized security infrastructures.

5.1 Introduction

The stakes in identification can be high, and authorities’ use of specialized technologies to search and match identity data can significantly mediate uncertain identification outcomes. An often invoked real-life example of the complexities of identification is when one of the “Boston bombers,” Kyrgyz-American Тамeрла́н Царна́ев, was not pulled aside for questioning when leaving from and returning to JFK Airport in New York for a trip to Dagestan in the Northern Caucasus in 2012 (an area considered as a high-risk travel destination by the US government).30 In April 2013, he and his brother carried out a terrorist attack during the annual Boston Marathon. According to an investigative report for the United States House Committee on Homeland Security, which media outlets reviewed, he was mistakenly not identified as a person of interest nor questioned (Schmitt and Schmidt 2013; Winter 2014). In 2011, Russian authorities had already informed their American counterparts of his ties to terrorist organizations. Following this information exchange, the US government added him to various watch lists and databases, including the Terrorist Identities Datamart Environment, which contains information on over 1.5 million people who are either known or suspected to be international terrorists. Such watchlisting systems automatically compare data about individuals, alerting and instructing authorities on what to do when they encounter someone whose data matches a watchlist entry. Due to missing information regarding Mr Царна́ев’s date of birth and variations in the transliteration of his name, “Tsarnaev” — “Tsarnayev,” the system did not raise an alert in his case.
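To make the mechanism concrete, the following minimal sketch contrasts a strict comparison with a more tolerant one for the two transliterations mentioned above. It does not reproduce how any actual watchlisting system works: the function names, the record layout, the similarity measure (Python's standard `difflib`), the threshold, and the date of birth are all assumptions made for illustration only.

```python
from difflib import SequenceMatcher

# Illustrative threshold (an assumption); real systems tune such values
# and rely on much richer models of names than plain string similarity.
NAME_THRESHOLD = 0.85


def name_similarity(a: str, b: str) -> float:
    """Return a similarity score in [0, 1] for two romanized surnames."""
    return SequenceMatcher(None, a.casefold(), b.casefold()).ratio()


def exact_match(traveler: dict, entry: dict) -> bool:
    """Strict comparison: both fields must be present and identical."""
    return (
        traveler["surname"] == entry["surname"]
        and traveler["dob"] is not None
        and traveler["dob"] == entry["dob"]
    )


def tolerant_match(traveler: dict, entry: dict) -> bool:
    """Tolerant comparison: similar surnames count as a match, and a
    missing date of birth does not rule a match out."""
    names_close = (
        name_similarity(traveler["surname"], entry["surname"]) >= NAME_THRESHOLD
    )
    dob_compatible = (
        traveler["dob"] is None
        or entry["dob"] is None
        or traveler["dob"] == entry["dob"]
    )
    return names_close and dob_compatible


# Hypothetical records: the date of birth is made up, and the watchlist
# entry deliberately lacks one, as in the case described above.
traveler = {"surname": "Tsarnaev", "dob": "1980-01-01"}
watchlist_entry = {"surname": "Tsarnayev", "dob": None}

print(exact_match(traveler, watchlist_entry))     # False
print(tolerant_match(traveler, watchlist_entry))  # True
```

The design choice to treat a missing date of birth as compatible illustrates a trade-off: a more tolerant comparison catches spelling variants, but it also returns more candidate records that someone has to assess.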

The case of the Boston bomber exemplifies at least three essential features of modern identification practices mediated by digital technologies: inherent difficulties, technological solutionism, and flexibility in the application of regulation. First, at all levels of bureaucracy, from street-level bureaucrats to system-level bureaucracies, there are inherent difficulties in accurately identifying and confirming identities. Most organizations must cope with databases containing incomplete, outdated, or incorrect data, or even duplicate entries referring to the same real-world persons (Keulen 2012). Such difficulties undermine the possibility of building trust. For example, an investigation by the European Union Agency for Fundamental Rights (FRA) into the fundamental rights implications of data quality in EU information systems for migration and border control (2018) has reported that “authorities often suspect identity fraud when cases of data quality are the real reason for concern” (p. 81). Hence, ensuring that data are correct, complete, and accurate and that they can be shared, used, and processed by different parties and information systems is deemed vital for the functioning of (bureaucratic) procedures.31

Within the context of identifying individuals in migration management and border control scenarios, inherent difficulties can be explained by the need to interconnect and harmonize diverse datasets spread across different organizations, timeframes, and geographical boundaries. For alphanumeric personal data (such as surname, date of birth, and nationality), these data quality issues can have various, often unspectacular, reasons. The case of Тамерла́н Царна́ев touches on the fact that watchlist databases require names in Latin characters, yet the transliteration of a name can take many forms. Hence, working with different data sources usually brings challenges related to what I will term “re-identification.” With this term, I intend to encompass a spectrum of iterative identification processes where data, whether sourced from within or across organizations and collected across diverse temporal and spatial contexts, are employed and interconnected to determine if multiple database records correspond to a single real-world individual.32 Instances of such re-identification encompass diverse scenarios, such as cross-referencing an individual’s passport details to access their visa records, correlating flight information to identify matches on watchlists, or linking migration and law enforcement databases to unveil potential suspect identities.

Second, new technologies keep being introduced in an attempt to solve “data friction” (Edwards 2010), and re-identification should be perceived not only as being disrupted by data friction but also as a means through which technology can introduce friction. Research should consider how technologies for searching and matching identity data reconfigure re-identification practices. This point draws on debates about the materiality and performativity of identification (e.g., Fors-Owczynik and van der Ploeg 2015; Leese 2022; Pelizza 2021; van der Ploeg 1999; Pollozek and Passoth 2019; Skinner 2018). Following these debates, identification should not be understood as a problem of truthful representation between people and their identity data but of how data infrastructures for identity management and identification “enact” individuals as migrants, criminals, or risky travelers. From this perspective, we can rethink the above quote from the Fundamental Rights Agency. Instead of only asking if doubts about someone’s identity arise from inaccurate data or mistrustful border control practices, we must also consider how re-identification enacts subjects as potential identity frauds. For example, a case of potential identity fraud could be discovered by automatically matching similar biographical data. Such a materialist and performative approach can replace discussions of identity as faithful representation with accounts about how re-identification introduces novel forms of suspicion. So far, however, literature has focused on the materiality and performativity of first (and often biometric) identification and registration (e.g., biometric refugee registration), and there needs to be more discussion about how people are re-identified and enacted throughout bureaucratic practices and data infrastructures.

Third, the literature on street-level bureaucracy emphasizes that public employees put government policies into practice through their regular interactions with citizens to deal with complex situations that do not always fit neatly into the rules and regulations made by legislators (e.g., Lipsky 2010). Re-identification can be ambiguous, and there is often a lack of clear guidelines; as a result, there is considerable room for discretion. However, the problem of re-identifying applicants during bureaucratic procedures has received little attention in the literature to date. A helpful example of an identification encounter where the tension between systems, policies, and local circumstances is apparent is provided by Pelizza (2021). She describes the back-and-forth between an applicant, a police officer, and a translator to convert the applicant’s name from Arabic to Latin characters during first registration at a Greek border. The name that emerges from this identification encounter is, in Pelizza’s words, the result of a “chain of translations” of the migrant’s name from oral to written form, until it finally ends up in the information system as the official version to be used to re-identify this person in future administrative procedures. The process Pelizza described is very different from an example I encountered in the Netherlands. In this case, when there are doubts about a name or a person refuses to give one, the person will be assigned a label that serves as a name and includes details about the applicant’s sex as well as the time and place of registration (e.g., “NN regioncode sex yymmdd hhmm”). In both examples, public servants re-identify people by tailoring their actions to the individual involved, all within the constraints and affordances of a given sociotechnical setting.
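As a purely illustrative aid, the label pattern quoted above (“NN regioncode sex yymmdd hhmm”) could be assembled as in the sketch below. Only the pattern itself comes from the example; the function name and the concrete values for the region code and the sex field are hypothetical, since their actual formats are not specified here.

```python
from datetime import datetime


def placeholder_name(region_code: str, sex: str, registered_at: datetime) -> str:
    """Assemble a label following the pattern 'NN regioncode sex yymmdd hhmm'.

    The formats of region_code and sex are assumptions for illustration.
    """
    return f"NN {region_code} {sex} {registered_at:%y%m%d %H%M}"


# Hypothetical registration on 17 May 2023 at 09:30.
label = placeholder_name("R12", "M", datetime(2023, 5, 17, 9, 30))
print(label)  # NN R12 M 230517 0930
```

Because such a label encodes the circumstances of registration rather than a name, later searches depend on staff knowing or reconstructing those circumstances.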

However, while the street-level bureaucracy literature has long debated the constraining or enabling effects of new technologies, such as those related to automated decision-making (Bovens and Zouridis 2002; Buffat 2015), it has been less specific about the entangled technologies. Nonetheless, it is clear that the expectations and materialities of data shape identification encounters. The designs and data models of technical solutions, such as those used to search for a person’s record or to determine whether two identity data records refer to the same person, embed many assumptions about those data (see also Pelizza and Van Rossem 2023; Van Rossem and Pelizza 2022), which shape bureaucratic re-identification. In the case of identity data, such tools assemble knowledge and enact equivalences between otherwise disparate naming practices. For example, the male and female family name forms might each have a slightly different final syllable, but they could still be considered equivalent. By examining how applicants of bureaucratic procedures are re-identified, this chapter intends to answer RQ2:

How do organizations that collect information about people-on-the-move search and match for identity data in their systems? How is data about people-on-the-move matched and linked across different agencies and organizations?

The research seeks to answer these questions by empirically studying re-identification at the Immigration and Naturalization Service in the Netherlands (IND). The analysis draws on data gathered through fieldwork (interviews, documents, and field notes) at the data matching software supplier and at the IND itself. The research hypothesized that the design of search and matching tools incorporates assumptions about databases and their data records, which influence and are influenced by bureaucratic re-identification practices. Assumptions like these include the possibility of incompatible naming practices and conventions, meaning databases could never be entirely accurate. Therefore, I formulated the hypothesis that utilizing data matching software could result in a redistribution of responsibilities and capabilities driven by the inherent affordances and limitations of the software itself. Specifically, I anticipated that government agents would rely less on their own identification expertise and more on automated matching algorithms to retrieve identity information.

The chapter aims to contribute to the literature on the materiality and performativity of identification, particularly at the intersection of science and technology studies (STS) and critical migration, security, and border studies. The findings of this chapter contribute to these bodies of scholarship in two ways. Firstly, while prior investigations have predominantly concentrated on initial registration, often involving biometric data, this chapter’s findings illuminate the often-overlooked processes of re-identification that transpire throughout bureaucratic procedures. The chapter sheds light on lesser-known practices of dealing with uncertain alphanumeric biographic data in migration management. Secondly, by examining routine re-identification interactions embedded within specific sociotechnical contexts, the findings demonstrate how incorporating data matching tools, which are intended to curb data friction, sometimes shifts the costs associated with managing ambiguous data to other actors or entities within bureaucratic systems.

The next section of this chapter reviews the background and related work that has been instrumental in conceptualizing re-identification as a bureaucratic practice, emphasizing its intersections with the materiality and performativity of identification processes. Following this, an overview of the case and the methodology adopted for examining matching systems and applicant re-identification at the Netherlands’ Immigration and Naturalization Service (IND) will be presented. The chapter’s empirical case and findings will be presented across three sections. Two empirical sections will juxtapose the designed applicant identification infrastructure and its practical implementation at the IND, recognizing three forms of data friction that can impede the re-identification process. The third empirical section will delve into the costs of unsuccessful re-identification, focusing on the problems related to duplicate records and the labor-intensive deduplication process. Finally, the chapter will answer its research question by synthesizing the diverse re-identification practices encountered throughout the empirical sections into an interpretative framework, highlighting a range of re-identification scenarios.

5.2 Conceptualizing re-identification: Bureaucratic contexts and the dynamics of identity data

Many interactions between migrants and public authorities involve forms of identification to establish or verify applicants’ identity in different steps of bureaucratic processes relating to granting asylum, issuing residency permits, naturalization, and so forth. As the literature on street-level bureaucracy has shown, the public-service workers in charge do not just carry out relevant policies; they are also actively involved in interpretative work through the discretion they exercise (e.g., Lipsky 2010; Collins 2016). What does it mean to regard re-identification practices as part of routine bureaucratic procedures?

5.2.1 Re-identification as a bureaucratic practice

Michael Lipsky’s book “Street-level bureaucracy: dilemmas of the individual in public services” (published in 1980) is widely credited with popularizing the concepts of street-level bureaucracy and discretion. According to this widely held view, diverse frontline public service workers influence public policy through regular interactions with the general public. As Lipsky (2010) states: “street-level bureaucrats have considerable discretion in determining the nature, amount, and quality of benefits and sanctions provided by their agencies” (13). For instance, a border patrol agent may have discretionary authority to grant entry to a traveler based on the results of an identification encounter with the traveler at passport control. Lipsky’s original argument, however, required updating in light of the increased use of digital technologies in government and the rise of e-government. Essentially, the digitalization of government agencies has compelled scholars to reconsider the role of street-level bureaucrats and their daily interactions (Bovens and Zouridis 2002; Buffat 2015; Busch and Henriksen 2018; Snellen 2002). With digitization, discretionary power is considerably reduced as decisions are delegated to automated systems. This argument has implications for identification, which can occur not only in direct interactions between applicants and bureaucratic officers but also increasingly in automated processes mediated by digital tools.

The transformations brought about by information and communication technologies were conceptualized by Bovens and Zouridis (2002) in an influential article as occurring first at the “screen-level” and then at the “system-level” of bureaucracy. “Screen-level bureaucracies” refers to how interactions between officials and citizens have become increasingly mediated through computer screens. For instance, the personal data of a residency permit applicant is filled out using electronic template forms in a case management system. Increasingly, applicants themselves are also given access to government information systems (Landsbergen 2004). Meanwhile, in this example, decision trees, business rules, and algorithms that model the policies and regulations will guide the decision to grant the permit. “System-level bureaucracies” refers to an even higher level of automation and digitization when collecting data and carrying out routine tasks. The following is the authors’ idealized description of the practitioners’ new roles in such an organization:

The members of the organization are no longer involved in handling individual cases, but direct their focus toward system development and maintenance, toward optimizing information processes, and toward creating links between systems in various organizations. Contacts with customers are important, but these almost all concern assistance and information provided by help desk staff. After all, the transactions have all been fully automated. (Bovens and Zouridis 2002, 178–79)

Within bureaucratic processes, individuals applying for asylum may often find themselves subject to iterative identification procedures that traverse the realms of street-level, screen-level, and system-level bureaucracies. The process typically commences at the street and screen levels, where front-line bureaucrats serve as the initial point of contact. These bureaucrats collect and input applicant information, funneling it into the complex interfaces of the bureaucratic systems. Subsequently, further decisions regarding data management, including updates, linkages, and corrections to applicant information, may be made at the system level. This iterative process through bureaucratic layers underscores the importance of consistent and precise identification practices across all levels.

The concept of “re-identification” highlights the entanglement of street-level procedures and the crucial role of automated systems within system-level bureaucracies responsible for processing applications from individuals seeking services or assistance. Automated processes must correctly re-identify the distinct applicants in bureaucratic processes to make the right decisions. System development will thus be required to automatically re-identify individual cases and to ensure that data are accurate and up to date, that no duplicate entries exist, and so on. Moreover, as applicants themselves are provided access to government information systems, such as through the filling out of digital application forms, they can be assumed to become more involved in the re-identification process. This change brings an additional layer of complexity, as the accuracy and consistency of the data provided by the applicants also contribute to the success of re-identification processes. In a system-level bureaucracy, re-identification will be linked to verifying and connecting individual records across systems and organizations.

In the literature about the entanglement of street-level, screen-level, and system-level bureaucracies, the desirability of automation for fairness and efficiency is usually weighed against its potential negative impact on human judgement and autonomy. Buffat (2015) categorizes these debates into the “curtailment thesis” and the “enablement thesis.” The former argues that information and communication technology (ICT) limits frontline officers’ discretion, transferring it to other actors. The “enablement thesis,” on the other hand, suggests that technologies play a more nuanced role by shaping interactions between technologies, workers, and citizens. A more recent perspective, the “digital discretion” literature, proposes the use of “computerized routines and analyses to influence or replace human judgement” (Busch and Henriksen 2018, 4) to adhere to policies and ensure fair and consistent outcomes. This chapter takes a distinct, STS-influenced approach, emphasizing how re-identification is intertwined with technology’s affordances and constraints that shape bureaucratic realities. Such an STS lens prompts us to be specific about the use of technologies, such as how the design of data matching systems, their embedded algorithms, and their interfaces affect the daily routines of those involved in re-identification processes and ultimately shape the re-identification outcomes.

The literature suggests that when analyzing the interplay between re-identification, discretion, and varying levels of bureaucracy, two key elements should be taken into account. Firstly, identification policies are executed by public workers in their daily routines, often influenced by their discretionary powers. Secondly, routine identification practices within bureaucratic frameworks need to be viewed in the context of broader changes in their sociotechnical systems. The concept of re-identification can help make sense of the interactions between bureaucratic organizations and applicants when these interactions combine street-level interactions, screen-level processes, and system-level bureaucracies. When there are uncertainties regarding precise identification, the discretionary components of procedures can become more important. In such scenarios, the interplay between human judgement and automated mechanisms could enhance or impede re-identification. It also raises questions about potential challenges associated with unsuccessful re-identification attempts, including subsequent consequences and necessary corrective measures.

Re-identification, as introduced here, is a concept that can offer insight into the entanglement between street-level, screen-level, and system-level bureaucracies. In the realm of bureaucratic processes, data and information frequently traverse these distinct levels, presenting both challenges and opportunities for the handling of applicant data. This concept of re-identification aims to untangle the complexities that arise when individuals engage with government systems and personnel, necessitating multiple rounds of identification and verification across bureaucratic contexts. By emphasizing the iterative character of identification, re-identification highlights the recurrent need for verifying individuals’ identities. Furthermore, it underscores the pivotal role of technology, interfaces, and organizational structures in shaping identification processes within bureaucratic systems.

5.2.2 Materiality and performativity of re-identification

A different body of literature further recognizes the importance of identification as intermingled with the government’s obligations and rights (e.g., citizenship, residency), as well as coercive measures (About, Brown, and Lonergan 2013a; Caplan and Torpey 2001). As recalled in Chapter 2, scholars have conventionally placed a significant emphasis on the interconnection between the formation of modern nation-states and the development of registration and identification systems, such as the creation of civil registers or passport documents (Breckenridge and Szreter 2012; Caplan and Torpey 2001; Torpey 2018). An often preferred term for the state’s capacity to identify its citizens is Scott’s (1998) notion of legibility. Scott noted how the increased interaction of states and their populations (e.g., for purposes of taxation) went hand in hand with projects of standardization and legibility as attempts to identify their people unambiguously. So, in Scott’s example, while cultural naming practices are very diverse and can serve local purposes, the standardization of surnames “was a first and crucial step toward making individual citizens officially legible” (p. 71). In these practices, the identity of the person is not a problem of representation between a person and information captured about them but one of reducing multiplicity while mutually enacting subjects, states, and institutions (Lyon 2009; Pelizza 2021). What needs to be clarified is how this concept of legibility and reducing multiplicity also intersects with the notion of re-identification, as the state’s ongoing endeavor to ensure legibility involves not only initial identification but also successive processes of verifying and connecting data over time and across various contexts.

The growing body of literature at the intersection between STS and Critical Security Studies has added an important dimension to the discussion on identification by accounting for the materiality and performativity of devices and practices (Cole 2001; Gargiulo 2017; Skinner 2018; Pelizza 2021; Suchman, Follis, and Weber 2017). Bellanova and Glouftsios (2022), for instance, have studied the actors and practices involved in maintaining the EU Schengen Information System (SIS). The SIS allows authorities to create and consult alerts on, among others, missing persons and persons related to criminal offences. By looking at how these alerts “acquire the status of allegedly credible and accurate information that becomes available to end-users through the SIS II” (p. 2), they make evident its role in conditioning international mobility. Fors-Owczynik and van der Ploeg (2015) have shown how three systems in the Netherlands translate and frame risk categories to identify potentially risky migrants and travelers. Building on this literature, re-identification can be understood as intricately connected with the materiality and performativity of devices, shaping the evaluation of data, individuals, and organizations as accurate and trustworthy. Findings from the politics of mobility literature (Cresswell 2010; Pallitto and Heyman 2008; Salter 2013), such as observations regarding the expedited processing of certain passenger classes through trusted traveler programs at airports, suggest that disparities in such evaluations can lead to divergent outcomes. In cases where discrepancies arise between data held in different systems, individuals may be subject to heightened scrutiny and additional security measures. Conversely, consistent information across systems has the potential to expedite their passage through border controls.

Surprisingly, despite the significance of re-identification in contemporary bureaucratic practices, there remains a noticeable gap in our understanding of how practitioners navigate the complexities arising from ambiguities in personal identity data during re-identification. A case in point is highlighted in a report by the European Court of Auditors, which outlines that “when border guards check a name in SIS II [the Schengen Information System], they may receive hundreds of results (mostly false positives), which they are legally required to check manually” (ECA 2020, 31). This operational challenge, rooted in the technology’s approach to computing and presenting matching data, exemplifies how the concept of re-identification intersects with the practical realities of border control. The abundance of false positives generated by the system raises questions about how re-identification encounters are negotiated when dealing with such ambiguities and how technologies might influence these interactions.

5.2.3 Conceptualizing data friction in re-identification

Critical data studies have made it clear that data are never “raw” (Gitelman 2013) and “contain traces of their own local production” (Loukissas 2019, 67), and that work is therefore needed to put data to use. For example, a European Court of Auditors report mentions that a prominent EU information system supporting border control contains millions of potential data quality issues, such as first names recorded as surnames or missing dates of birth (ECA 2020). Many such discrepancies are likely related to work practices and issues of fitting local circumstances to global standards (Bowker and Star 1999). As Loukissas (2019) remarks, databases might contain various errors and “local knowledge [is needed] to see that such errors are not random” (p. 67). In this sense, data serve as evidence of the local conditions of their production, which, for future re-identification processes, must be linked across space and time. If data quality problems and uncertainty are facts of life (Keulen 2012), then bureaucratic organizations must cope with this uncertainty in re-identification practices.

As highlighted in Chapter 1, multiple technical mechanisms exist for dealing with such uncertainties, such as determining whether two or more data records pertain to the same real-world individual (Batini and Scannapieco 2016). Data matching techniques will compare attributes of data records and use classification methods to determine matches (Christen 2012). There are numerous classification techniques to determine matches: some are based on adhering to specific rules, while others take a more probabilistic approach. Metrics can, for example, calculate the similarity of two sequences of characters based on the number of operations required to transform one into the other. In this way, the names “Sam” and “Pam” may be considered closely related (for instance, was it a typo?). Other approaches may even calculate such similarities by comparing how names are pronounced (in English). When matching personal data, rules-based matching may include ignoring honorifics and titles (e.g., Mr., Ms., Dr.). Although these technical mechanisms for data matching are widely recognized, their practical implications in the processes of re-identification remain less evident.
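To make these mechanisms more tangible, the following minimal Python sketch combines one rule (ignoring titles) with one string metric (the number of single-character edits needed to turn one name into another). It is an illustration of the general techniques only. The list of honorifics, the function names, and the way the distance is scaled into a similarity value are my own assumptions and do not describe any particular matching product.

```python
import re

# Illustrative, non-exhaustive list of titles (an assumption for this sketch).
HONORIFICS = {"mr", "mrs", "ms", "dr", "prof"}


def strip_honorifics(name: str) -> str:
    """Rule-based step: drop any token that is a title such as 'Dr.'."""
    tokens = [t for t in re.split(r"\s+", name.strip()) if t]
    kept = [t for t in tokens if t.rstrip(".").lower() not in HONORIFICS]
    return " ".join(kept)


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum number of single-character
    insertions, deletions, or substitutions turning a into b."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def similarity(a: str, b: str) -> float:
    """Scale the distance to a value between 0 and 1 (1 = identical)."""
    a, b = strip_honorifics(a).lower(), strip_honorifics(b).lower()
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1 - edit_distance(a, b) / longest
```

In this sketch, “Sam” and “Pam” are one substitution apart, which is why a metric of this kind would treat them as closely related, while “Dr. Sam” and “Sam” become identical once the title rule has been applied.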

The insights gleaned from Critical Data Studies indicate that investigating technical data matching mechanisms not only reveals local conditions of data production and operational dilemmas but also offers valuable insights into re-identification. This is exemplified by the specific case of data matching aimed at discovering and resolving duplicate data records. For instance, a migrant might inadvertently be registered multiple times in a database due to technical glitches. Typically, a deduplication process (for example, as detailed in Batini and Scannapieco 2016) periodically compares each record with all others in the database to identify records pertaining to the same individual. A domain expert usually intervenes to decide whether these matches do indeed pertain to the same individual and, if so, to consolidate the multiple records into a single one.
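The deduplication routine described above can be sketched as a pairwise comparison that produces a list of candidate pairs for an expert to review. The sketch below is a simplified illustration under my own assumptions: the record fields (`id`, `name`, `dob`), the weights, and the threshold are hypothetical, and the name comparison uses the generic `SequenceMatcher` from Python’s standard library rather than any method named in the literature cited here.

```python
from difflib import SequenceMatcher
from itertools import combinations


def record_similarity(a: dict, b: dict) -> float:
    """Weighted score from name similarity and exact date-of-birth agreement.
    The 0.7/0.3 weights are arbitrary choices for this sketch."""
    name_score = SequenceMatcher(None, a["name"].lower(), b["name"].lower()).ratio()
    dob_score = 1.0 if a["dob"] == b["dob"] else 0.0
    return 0.7 * name_score + 0.3 * dob_score


def candidate_duplicates(records: list, threshold: float = 0.85) -> list:
    """Compare every record with every other one and return the pairs
    scoring at or above the threshold, best first, for human review."""
    pairs = []
    for a, b in combinations(records, 2):
        score = record_similarity(a, b)
        if score >= threshold:
            pairs.append((a["id"], b["id"], round(score, 2)))
    return sorted(pairs, key=lambda pair: pair[2], reverse=True)


# Hypothetical records: 1 and 2 differ only by a transposition in the surname.
records = [
    {"id": 1, "name": "Jan de Vries", "dob": "1990-04-12"},
    {"id": 2, "name": "Jan de Vreis", "dob": "1990-04-12"},
    {"id": 3, "name": "Maria Silva", "dob": "1985-01-30"},
]
flagged = candidate_duplicates(records)
```

The function only flags pairs. The decision to merge remains with the reviewer, which mirrors the division of labor between matching and expert judgment described above.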

Following Loukissas (2019), the process of “normalizing” duplicates can be “a key to learning about the heterogeneity of data infrastructures” (p. 60). Loukissas gives the example of software that identifies digital copies of books, newspapers, and objects in a digital library collection. He challenges the software’s intention to eliminate these copies, suggesting that delving into the duplicates’ origins could be more instructive. This discussion on duplicates holds relevance for re-identification in two ways. Firstly, deduplication can offer similar insights into multiple re-identification practices. Secondly, the presence of duplicates prompts another question: what are the implications for applicants and organizations of data matching failures and unsuccessful re-identification?

The complexities arising from impediments in the seamless flow of identity data may indeed be at the heart of unsuccessful data matching and re-identification processes, which can be aptly conceptualized as manifestations of “data friction” (Edwards 2010). Data friction, according to Edwards (p. 84), “refers to the costs in time, energy, and attention required simply to collect, check, store, move, receive, and access data.” Data friction signifies the barriers that disrupt the smooth flow of data across different actors, organizations, and material forms. As noted by Bates (2017), data friction is “influenced by a variety of infrastructural, sociocultural and regulatory factors interrelated with the broader political economic context,” all of which influence the movement or hindrance of data. Pelizza (2016b) explains the process of addressing data friction as a dynamic interplay between aligning and replacing infrastructural elements that facilitate data movement, where changes in one aspect impact the other. In her study of the Dutch land registry, Pelizza (2016b) portrays data friction as conflicts revolving around finding the best configurations of actors, institutions, and resources to ensure dependable data. As she emphasizes, even in complex systems designed to mitigate friction, complete removal is often unattainable; instead, the associated costs tend to shift to alternative actors, organizations, or material forms. Consequently, we may hypothesize that data frictions concerning re-identification present associated costs, such as organizational labor, interpretive activities, and task complexification.

Identity data takes on diverse forms as it navigates through different actors and organizations, and these transformations may entail associated costs when utilized for re-identification purposes. I propose that the concept of data friction can be extended to the movement of identity data across organizations and different material forms. For instance, as identity data transitions from a physical passport to a digital database record or moves between the systems of different organizations, barriers may emerge, leading to friction in the smooth movement of identity data and, consequently, re-identification. Regulatory constraints undoubtedly influence the movement of identity data between organizations. However, discrepancies may also arise due to variations in naming conventions, differences in date of birth formats, or inconsistencies in the use of characters like hyphens or spaces in surnames across organizations. To illustrate, let us consider my experience while applying for a Russian visa in 2018: my passport information was copied into various systems, leading to an error where my second name was mistaken for a patronymic in the application. Moreover, my first name was inadvertently listed with the letter “v” instead of “w” in the machine-readable zone of the visa due to the absence of the letter “w” in Cyrillic. These confusions stemmed from differences in naming conventions and ambiguities in the transliteration process. Even seemingly minor discrepancies like this can create complexities in the re-identification process.
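Some of the discrepancies mentioned here, such as hyphens versus spaces in surnames or differing date formats, are commonly handled by normalizing values before they are compared. The following Python sketch illustrates that idea under stated assumptions: the function names are hypothetical, the list of date formats is illustrative, and ambiguous numeric dates are assumed to be written day first, which is itself a local convention that may not hold for data from elsewhere.

```python
import re
import unicodedata
from datetime import datetime
from typing import Optional


def normalize_surname(surname: str) -> str:
    """Strip accents, fold case, and treat hyphens and runs of spaces alike."""
    decomposed = unicodedata.normalize("NFKD", surname)
    without_accents = "".join(c for c in decomposed if not unicodedata.combining(c))
    return re.sub(r"[\s\-]+", " ", without_accents).strip().casefold()


# Illustrative formats only; numeric dates are ASSUMED to be day-first.
DATE_FORMATS = ("%Y-%m-%d", "%d-%m-%Y", "%d/%m/%Y", "%d.%m.%Y")


def normalize_date(text: str) -> Optional[str]:
    """Return the date as YYYY-MM-DD, or None if no known format fits."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None
```

Normalization of this kind reduces some friction but also embeds choices. Deciding that a hyphen and a space are equivalent, or that the first number in a date is the day, is precisely the sort of local assumption that can produce new mismatches when data travel between organizations.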

Mechanisms aimed at mitigating data friction in the context of re-identification are likely to bring about shifts in associated costs. For instance, one hypothesis could suggest that the circulation of identity data and the presence of data friction are closely linked to the proliferation of duplicate records. Consider the scenario where various organizations share a common database: disparities in identifying individuals, like variations in naming conventions or data formats, could lead to registering multiple entries for the same individual. In this and similar cases, reducing data friction by integrating data matching tools into bureaucratic systems simultaneously redistributes costs. Integrating such tools, for example, might necessitate organizational adjustments, as staff may need to allocate additional resources to manage other aspects of re-identification, such as the labor-intensive task of detecting and resolving identity discrepancies.

This section has reviewed literature that has been instrumental in conceptualizing re-identification as an iterative bureaucratic practice, emphasizing its materiality and performativity dimensions. The conceptualization of re-identification as a bureaucratic practice underscores its significance within the interactions of bureaucratic organizations with applicants, particularly as these interactions become increasingly digitized and automated. As a bureaucratic practice, it underscores the potential links of re-identification with the exercise of discretion by bureaucrats in routine re-identification practices at the street-level and screen-level. Re-identification can be further contextualized at the system-level within the realm of the materiality and performativity of devices, ultimately influencing the evaluation of data, individuals, and organizations, shaping their credibility and reliability.

Additionally, delving into the technical mechanisms of data matching not only uncovers operational intricacies but can also serve as a means to gain insights into the diversity of re-identification processes. Integrating data matching tools within bureaucratic systems with the aim of reducing data friction can inadvertently shift the associated costs. As such, the literature discussed here supports the idea that the re-identification of individuals throughout bureaucratic processes and data infrastructures is a crucial but understudied area of research. An iterative approach to identification underscores that re-identification is not a one-off event but an ongoing, multifaceted process spanning diverse bureaucratic tiers. It encompasses street-level interactions, screen-level engagements, and system-level operations, unfolding across both spatial and temporal dimensions. This approach enables us to hypothesize that data friction is an inherent element within these iterative identification processes, potentially leading to less-than-optimal re-identification outcomes that entail organizational costs. The next section will describe the empirical case and the methods used to investigate re-identification.

5.3 Case and method: Empirical analysis of the interplay between data matching systems and applicant re-identification

The investigation into re-identification within migration management draws upon data collected through fieldwork conducted in person and remotely between July 2020 and July 2021. While a comprehensive methodological framework is outlined in Chapter 3, this section offers additional specific details tailored to the context of this chapter. Throughout this fieldwork, I established a collaborative partnership with the Dutch company WCC Group, which specializes in developing data matching and deduplication software. In the context of this chapter, the focus will be on the software’s use by the Netherlands’ Immigration and Naturalization Service (IND). The IND, entrusted with responsibilities such as processing residency and nationality applications, utilizes the ELISE software for searching and matching applicants’ identity data within the back-office system; the software also assists in managing data anomalies, such as duplicate records.

The fieldwork delved into applicant re-identification procedures at the IND, specifically examining their interplay with WCC’s “ELISE ID platform.” By design, the matching system aims to circumvent errors in both the database and the search criteria. For instance, it can automatically accommodate instances where the date of birth is incomplete or where day and month values have been inadvertently interchanged in the search query or database records. Using the ELISE system thus facilitates re-identification when discrepancies may arise from difficulties in matching personal data from different locales, scripts, and cultural contexts. The re-identification of an applicant through a search, based on factors like their name, nationality, and date of birth, results not in a simple roster of exact matches but rather in a compilation of applicants, each with an associated value signifying the likelihood of a match between identity data records. In short, the data matching engine integrates diverse algorithms into a cohesive system that aims to address uncertainties in identity matching, thus supporting the IND’s operational processes.
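The general idea of a search that tolerates incomplete or interchanged date values and returns scored candidates rather than exact hits can be illustrated with a short Python sketch. This is not a description of how ELISE works internally: the scoring values, the weights, the use of `00` to mark an unknown day or month, and the record fields are all assumptions made for the purpose of illustration.

```python
from difflib import SequenceMatcher


def dob_score(query: str, record: str) -> float:
    """Compare two 'YYYY-MM-DD' strings; '00' marks an unknown day or month.
    The values 1.0 / 0.8 / 0.6 are arbitrary choices for this sketch."""
    qy, qm, qd = query.split("-")
    ry, rm, rd = record.split("-")
    if qy != ry:
        return 0.0
    if (qm, qd) == (rm, rd):
        return 1.0                      # exact agreement
    if (qm, qd) == (rd, rm):
        return 0.8                      # day and month interchanged
    month_ok = qm == rm or "00" in (qm, rm)
    day_ok = qd == rd or "00" in (qd, rd)
    return 0.6 if month_ok and day_ok else 0.0  # compatible but incomplete


def search(query: dict, records: list, minimum: float = 0.5) -> list:
    """Return (score, id) tuples at or above the minimum, best match first."""
    results = []
    for record in records:
        name = SequenceMatcher(None, query["name"].lower(),
                               record["name"].lower()).ratio()
        score = 0.6 * name + 0.4 * dob_score(query["dob"], record["dob"])
        if score >= minimum:
            results.append((round(score, 2), record["id"]))
    return sorted(results, reverse=True)
```

Even in this toy version, the output is a ranked list that someone has to interpret. A record with the same name but a different year of birth still appears, only lower in the ranking, which hints at the interpretive work that scored results can require of their users.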

Methodologically, the research considered discrepancies between tool design and practical usage. This was accomplished by comparing how different organizational actors within the IND employ data matching capabilities in their daily re-identification tasks. The study hypothesized that for re-identification to be effective, that is, for IND staff to use the search and interpret the results effectively, there must be some alignment between the system and its users. The research placed a specific emphasis on understanding the challenges faced by IND staff while employing search and match functions in IND’s systems, revealing underlying assumptions and expectations of the data matching system. Indeed, discrepancies between intended use and actual application might underlie challenges in re-identification.

5.3.1 Data collection

The data collection process was facilitated by my involvement as a temporary member of WCC’s ID team. This collaboration enabled me to access essential technical documentation and engage in informative meetings, including some conducted on-site at the company’s headquarters in Utrecht, The Netherlands. The collected technical documents regarding integrating the ELISE data matching system into the IND systems can be classified into three categories. Firstly, some documents cover the ELISE system’s overarching technical specifications. Independent of any specific organizational implementation, these documents provided insights into the data matching software’s overall design and intended applications. Secondly, a trove of technical documents, meeting minutes, and presentations delved into the precise implementation of the ELISE system within the context of the IND. These resources helped analyze the search and match software integration into the IND’s systems. Thirdly, the collected documents encompassed public communications, such as online news aimed at ICT professionals and official reports like government audit findings. These sources contributed an additional layer of context regarding the evolution and establishment of the IND’s information system. The initial reading of these documents, accompanied by annotation and note-taking, served as a jumping-off point for structuring the questions to be asked during the interviews with IND staff.

Following the document analysis, I conducted semi-structured interviews to gain insight into the development and use of the search and match tools. As detailed in Chapter 3, interviewees can be divided into two main groups based on their themes, and I used a different protocol for each group. The first group consisted of IND staff, the users, whose duties include looking up and matching identities in their databases, which necessitates using the ELISE ID platform. The second group consisted of WCC staff involved in the software’s development, deployment, and maintenance, the designers. The analysis in this chapter mainly draws on data from interviews with IND staff and notes from the briefing meetings with WCC ID team members. Although the interviews with WCC staff provided additional context about ELISE, they are not directly featured here and will be more important for Chapter 6. With the aid of the ID Team, initial contact with the IND was established, yet pandemic constraints necessitated interviews via online meetings or phone calls. In five interviews, each spanning roughly an hour, participants shared their experiences with the search and match tools at the IND.

The interview protocol for IND staff was designed to explore various aspects of re-identification within the IND organization. Interviews began with general inquiries about the interviewees’ roles within the IND, providing context for understanding their experiences and tailoring subsequent questions. The initial questions centered around three key factors influencing the searching and matching of applicant data: the formulation of search queries, the computation of matches, and the handling of search results. Regarding the first factor, questions addressed IND personnel’s approach to formulating search queries, including the data categories they input, their knowledge of data elements that yield better search results, and their utilization of match features such as wild cards. For the second factor, questions were tailored to uncover their expectations regarding the match results and their understanding of the match engine’s functionality. For the third factor, the questions probed their processing of search results, addressing their perception of result quality, the ranking of matches, and the interpretation of match scores. In addition to those questions, the protocol covered duplicates and the deduplication process, asking about the criteria used to identify duplicates and the organization’s approach to resolving them. Lastly, the protocol investigated the participants’ use of additional systems and data to support the re-identification process. Overall, the interview protocol aimed to provide comprehensive insight into the search and match procedures and the challenges IND personnel face in re-identifying applicants.

5.3.2 Data coding and analysis

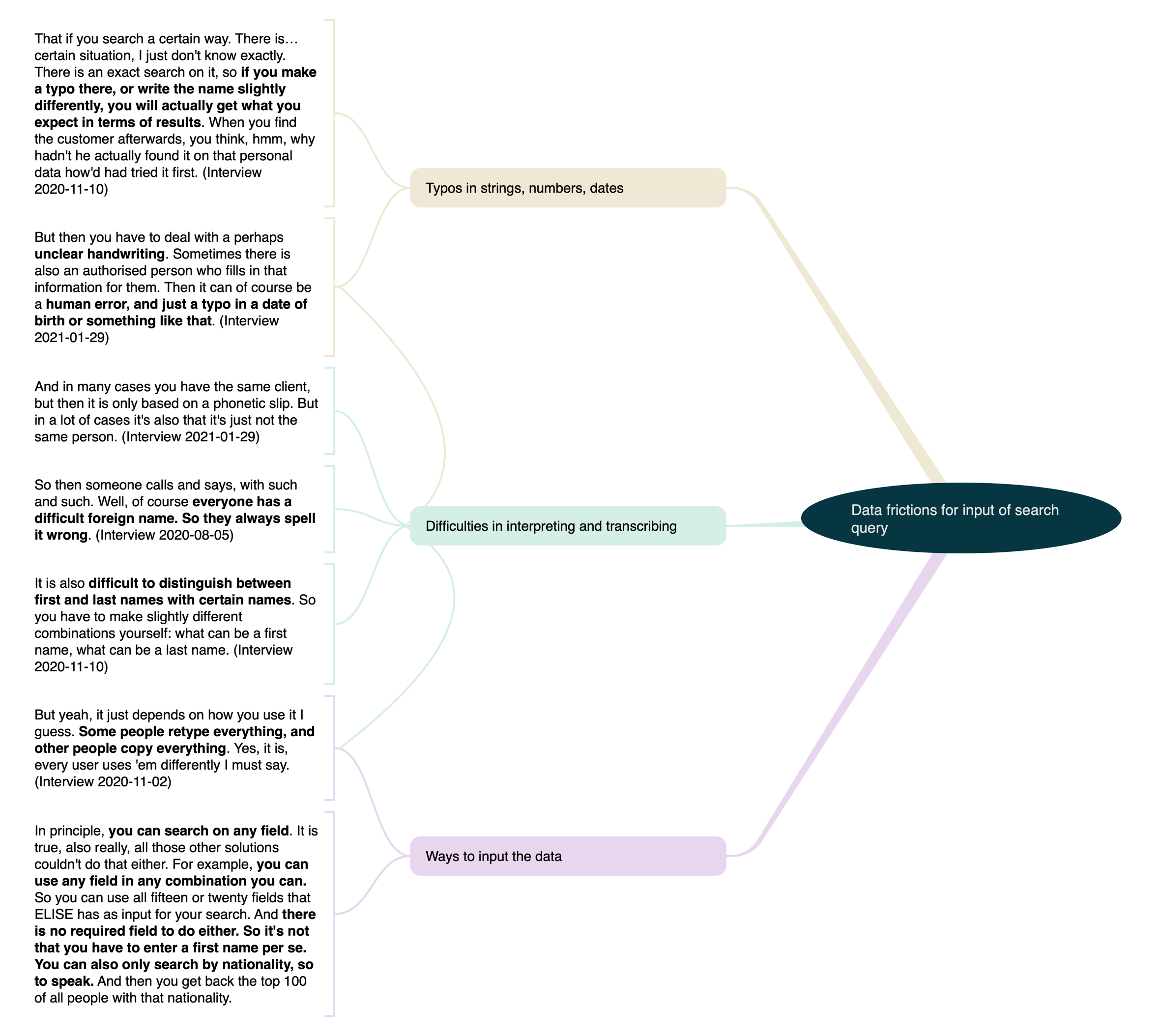

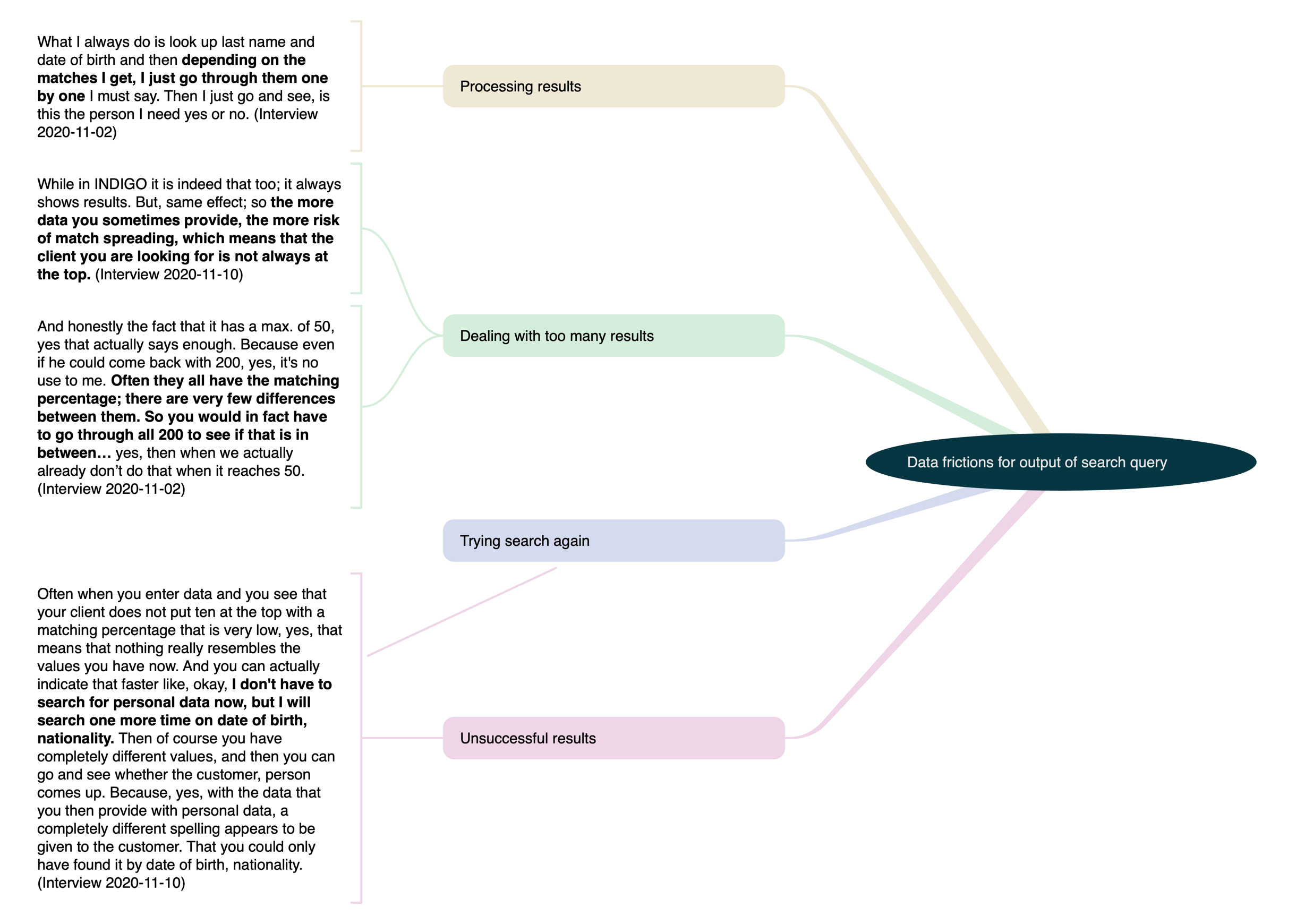

For analyzing the fieldwork data, I followed standard methods for coding and analyzing qualitative data. After collecting and preparing data from documents and interviews (including transcription), I coded and analyzed the data using the computer-assisted qualitative data analysis software ATLAS.ti. The data coding and analysis drew inspiration from the three interconnected steps of the “Noticing-Collecting-Thinking” (NCT) method by Friese (2014), which follows a standard qualitative data analysis but is tailored for the ATLAS.ti software. The Noticing phase involved both deductive codes and openness to inductive insights from the data. These codes were reviewed and organized into similar categories in the Collecting step. The third step, Thinking, led to identifying patterns, processes, and typologies among the developed codes. Figures 5.2 and 5.3 provide a simplified overview of this process, illustrating broad deductive themes on the right, more inductive findings in the middle, and representative interview excerpts on the left.

In more detail, the first step of the data coding process began with deductive coding, aligning with the key factors influencing the search and matching of applicant data, as outlined in the interview protocol. These factors encompassed the formulation of search queries, match computation, and handling of search results. The data coding utilized several of these predefined codes, falling under broad categories like “search query,” “search engine,” and “search results.” This coding method began by applying these predetermined codes to relevant excerpts. However, these codes were subsequently refined through an inductive approach, recognizing patterns within the interview excerpts.

The second step, refining and collecting codes, proceeded by appending a colon (“:”) and a further name to a code in order to introduce inductive sub-codes. For instance, “search query: use of data: amount of data available” extends a deductive category concerning the types of data employed in crafting search queries (“search query: use of data”). The inductive aspect (“amount of data available”) emerged from the interviews and was consistently used for quotes referencing the quantity of data available when formulating search queries for re-identifying applicants.
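The colon-based naming convention can be read as a simple hierarchy, which the following short Python sketch makes explicit. It is only an illustration of the naming scheme: the function names are hypothetical, and the sketch does not reproduce any ATLAS.ti feature.

```python
from collections import defaultdict


def split_code(code: str) -> list:
    """Split a code name such as 'search query: use of data: ...' into levels."""
    return [part.strip() for part in code.split(":")]


def group_by_category(codes: list) -> dict:
    """Group codes under their top-level (deductive) category."""
    tree = defaultdict(list)
    for code in codes:
        levels = split_code(code)
        tree[levels[0]].append(levels[1:])
    return dict(tree)


levels = split_code("search query: use of data: amount of data available")
```

Here the first level corresponds to the broad deductive category, and each further level to a refinement, with the final level being the inductive sub-code in the example above.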

Figure 5.2: This diagram shows how friction with search query input was found by analyzing interview data.

Figure 5.3: This diagram shows how friction with interpreting search results was found by analyzing interview data.

The third step of the process involved further discerning patterns, processes, and typologies within the developed codes. Two illustrative examples encompassed an in-depth analysis of friction associated with search query input and output, visualized in Figures 5.2 and 5.3. In the diagram illustrating search input, three main challenges were identified, each linked to underlying codes associated with challenges in constructing search inputs due to typographical errors in strings, numbers, and dates; complications in transcribing or interpreting data; and uncertainties about how data should be input (e.g., determining the extent of data to input and which combinations to use). Conversely, the figure illustrating search output captured different broad types of challenges, again connected to more specific underlying codes tied to processing results, including instances where the output yielded an excessive or insufficient number of results, as well as instances where the results were unexpected.

5.4 Exploring the designed infrastructure for identifying applicants

This section starts the empirical analysis by situating the re-identification of applicants33 at the Immigration and Naturalization Service (IND) within its software architecture and inter-organizational frameworks of the Netherlands’ migration policy. Understanding the organizational and software architecture helps situate the intricacies and challenges of the IND’s re-identification processes. The IND’s operations rely on its information systems called INDiGO, designed to manage the identification and registration of applicants applying for various purposes, including residency or naturalization. Additionally, INDiGO interfaces with various partners and stakeholders within the migration chain whose systems and databases also play a role in the IND’s processes. This initial examination of the organizational and software architecture will provide the foundation for the following sections, in which this architecture and software designs will be compared to their practical implementation.

5.4.1 Migration chain and identifying chain partners

The processes and practices involved in re-identifying applicants for the IND need to be understood within the larger context of the information infrastructure for handling foreigners in The Netherlands. The IND is just one link in the “migration chain” (migratieketen), a collaboration between various governmental and non-governmental organizations in The Netherlands. Each link in this chain, known as a “chain partner” (ketenpartner), is responsible for different processes foreign nationals in The Netherlands go through, including entering the country, obtaining a residence permit, naturalization, and departure or expulsion. These partners exhibit interdependence since their decisions often necessitate information from others, facilitated by an interconnected information infrastructure.

The information infrastructure of the migration chain can be traced back to a subfield of information science called “chain computerization” (keteninformatisering in Dutch), which has been influential in Dutch academia and government digitalization (e.g., Grijpink 1997). Chain computerization pertains to the information infrastructure of a networked chain of interdependent organizations without a formal hierarchy (Oosterbaan 2012). These entities collaborate and exchange information to execute a shared process, exemplified in this context by the handling of foreign individuals within the Netherlands. The architectural framework of the migration chain, as described in Zijderveld, Ridderhof, and Brattinga (2013), defines principles and objectives aimed at enhancing information exchange. Addressing identification challenges, particularly those contributing to duplicate registrations among chain partners, constitutes an important focal point within the architecture.34

Foreigners within the migration chain are assigned a unique identifier known as the “v-number,” which is used for identifying individuals throughout the chain. This unique identifier is issued through the Basisvoorziening Vreemdelingen (BVV) system, which functions as a centralized repository for sharing and consulting information about foreign nationals among the various chain partners. Upon the first contact with a foreign national, the relevant organization must check that the individual has not been previously registered and, consequently, does not already possess a v-number. The BVV database can be updated and enriched with identity data, travel information, identity documents, biometric characteristics, and status data, such as asylum application outcomes, originating from the chain partners’ systems. Even though every partner in the chain has its own database and information regarding migrants, the BVV enables the linkage of this information by utilizing the v-number as a shared and unique identifier (ICTU 2015).
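The role of a shared identifier in linking records held by different organizations can be shown with a small Python sketch. Everything in it is hypothetical: the system names, the record fields, and the identifier values such as `V-0001` are invented for illustration and do not reflect the actual format of v-numbers or the design of the BVV.

```python
from collections import defaultdict


def link_by_v_number(*partner_systems) -> dict:
    """Collect each partner's record for a person under the shared identifier.
    Each argument is a (system_name, list_of_records) pair."""
    linked = defaultdict(dict)
    for system_name, records in partner_systems:
        for record in records:
            linked[record["v_number"]][system_name] = record
    return dict(linked)


def possible_double_registrations(linked: dict) -> dict:
    """Flag name and date-of-birth combinations found under more than one identifier."""
    seen = defaultdict(set)
    for v_number, by_system in linked.items():
        for record in by_system.values():
            seen[(record["name"].casefold(), record["dob"])].add(v_number)
    return {key: sorted(ids) for key, ids in seen.items() if len(ids) > 1}


# Hypothetical data: the second system holds the same person under two identifiers.
system_a = ("system_a", [{"v_number": "V-0001", "name": "Sam Jansen", "dob": "1990-04-12"}])
system_b = ("system_b", [
    {"v_number": "V-0001", "name": "Sam Jansen", "dob": "1990-04-12"},
    {"v_number": "V-0002", "name": "sam jansen", "dob": "1990-04-12"},
])
linked = link_by_v_number(system_a, system_b)
doubles = possible_double_registrations(linked)
```

Linking by identifier is trivial as long as each person has exactly one. The second function indicates why the check at first contact matters, since a person registered twice ends up with two identifiers that no longer link to each other.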

The processes of first registration and identification of foreign nationals are further directed by the “Protocol identification and labeling” (PIL, “Protocol identificatie en labeling” in Dutch). The various chain partners use this protocol, which standardizes the process of identifying and registering foreign nationals as a way to ensure that “unique, unambiguous personal data of optimal quality are available in the migration chain” (Ministerie van Justitie en Veiligheid 2022, 9). As implied by its name, the protocol also includes a labeling provision. If someone is hesitant or unwilling to reveal their name, they will be assigned a label. This label will serve as their name and contain details about their gender and the date, time, and place of registration. For example, the label could be NN regioncode sex yymmdd hhmm. Therefore, the protocol can be interpreted as being designed to minimize identity multiplicity and streamline subsequent re-identification by providing clear guidelines for recording individuals’ data. Nonetheless, as elucidated later in this chapter, the presence of individuals possessing multiple v-numbers indicates that this ideal scenario may not always hold in practice.
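As a purely illustrative reading of the example label format given above (NN regioncode sex yymmdd hhmm), the following Python sketch assembles such a label from its parts. The function name, the region code `R01`, and the single-letter encoding of sex are hypothetical placeholders, and the exact encodings prescribed by the protocol are not reproduced here.

```python
from datetime import datetime


def make_label(region_code: str, sex: str, registered_at: datetime) -> str:
    """Build a placeholder name of the form 'NN regioncode sex yymmdd hhmm'."""
    return f"NN {region_code} {sex} {registered_at:%y%m%d} {registered_at:%H%M}"


# Hypothetical values for illustration only.
label = make_label("R01", "M", datetime(2021, 3, 5, 14, 7))
```

A label built this way encodes the circumstances of registration rather than anything about the person, which makes it unique enough to function as a name while carrying traces of where and when it was produced.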

The key takeaway from the architecture of the migration chain is that the unique identification of individuals is deemed crucial for re-identifying foreigners in The Netherlands among all the chain partners. Specifically, the IND, as one of the chain partners, relies on the BVV system and the v-number for effective identification and re-identification of applicants. Through this re-identification process, the IND is tasked with checking whether an applicant has already been registered by other chain partners such as the “Vreemdelingenpolitie” (national police) or the “Koninklijke Marechaussee” (national gendarmerie). Next, we will delve deeper into the systems employed by the IND and explore further their interactions with the BVV and other chain partners.

5.4.2 Unpacking the IND and INDiGO infrastructure

Shifting the focus from the broader discussion of the identification of foreign nationals in the migration chain, this section delves into a more specific examination of how applicants are identified in the information systems employed by the IND. The central pillar of this information infrastructure for application and identity management is the INDiGO system.35 The implementation of the INDiGO was part of a more extensive digitization project and data transfer from a previous system called INDIS. The distinct manner in which the upgraded system technically compartmentalizes various facets of organizational operations is of particular significance. This division is primarily manifested in the separation of policy implementation, which involves the application of business rules aligned with the Dutch Aliens Act, from information management tasks, including data storage, searching, and matching (KPMG IT Advisory 2011).36

The information infrastructure of the IND, as outlined in Figure 5.4, can be characterized as a form of system-level bureaucracy (Bovens and Zouridis 2002), given that INDiGO places significant emphasis on information management and the automation of decision-making in the processing of digital dossiers.37 At the system level, the identification of applicants unfolds through automated data exchanges, where applicants are re-identified in processes to update their applications and dossiers. Re-identification also extends across multiple bureaucratic tiers, spanning the street- and screen-levels. At these levels, IND staff, both at the front and back offices, interact with the graphical interfaces of the information systems to confirm and verify applicant identities while processing their applications. The following section will examine how INDiGO utilizes the ELISE software for searching and matching applicant data across all these bureaucratic levels.

Figure 5.4: A schematic representation of the IND’s information infrastructure, illustrating its role in facilitating tasks related to application interactions, and inter-organizational collaboration with MC partners.

5.4.3 Applicant re-identification and matching with ELISE software in the INDiGO system

Throughout the evolution of INDiGO, the ELISE software for searching and matching applicants has been applied in various scenarios, which can be categorized into the following three cases. First, the ELISE software was initially used during the transition from the old IND information system (INDIS) to the new INDiGO. During a transition period when INDIS and INDiGO ran in parallel, the IND used the ELISE software to migrate legacy data by re-identifying matching applicant identities between the two systems.38 The ELISE software’s second and most prominent use is to facilitate applicant data searches. While the underlying case management system provides a “traditional” search, this was deemed insufficient because it would fail to return results when search criteria were too strict or contained errors. For this reason, the ELISE system was added so that its fuzzy search algorithms could provide more advanced and reliable searching capabilities (Interview 2020-08-05). Third, the ELISE software searches the database for possible duplicate applicant data. The software attempts to match all recently created applicants to all other applicants in the database. Potential duplicate matches that meet specific criteria are then flagged and investigated further. In all three uses, the software calculates match scores based on the likelihood that an applicant in the database meets the given search criteria.39
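The third use, checking recently created applicants against the rest of the database, can be illustrated with a minimal sketch. It is not the ELISE implementation: the record fields, the 0.7/0.3 weighting, the 0.85 threshold, and the use of Python's standard `difflib` similarity ratio are all assumptions chosen for illustration.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Return a 0..1 similarity ratio between two strings (case-insensitive)."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def flag_possible_duplicates(new_records, existing_records, threshold=0.85):
    """Compare every newly created record with every existing record.

    The score mixes name similarity with an exact date-of-birth check.
    Weights and threshold are illustrative assumptions, not ELISE settings.
    Returns (new_id, existing_id, score) tuples for a human to investigate.
    """
    flagged = []
    for new in new_records:
        for old in existing_records:
            name_part = similarity(new["name"], old["name"])
            dob_part = 1.0 if new["dob"] == old["dob"] else 0.0
            score = 0.7 * name_part + 0.3 * dob_part
            if score >= threshold:
                flagged.append((new["id"], old["id"], round(score, 2)))
    return flagged


# Hypothetical example data.
existing = [
    {"id": "E1", "name": "Alexandra Nowak", "dob": "1990-03-12"},
    {"id": "E2", "name": "Jan de Vries", "dob": "1985-01-01"},
]
new = [{"id": "N1", "name": "Aleksandra Nowak", "dob": "1990-03-12"}]
flagged = flag_possible_duplicates(new, existing)
```

In this sketch only the pair N1/E1 is flagged. The flag is a prompt for investigation rather than a decision, which mirrors the description above of potential duplicates being "flagged and investigated further."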

Following my analysis of the technical architecture and utilization of ELISE in INDiGO, as detailed in design and technical documents, I suggest categorizing the searching and matching into three essential components: query input, processing by the matching engine, and results output. Designed as a generic and decontextualized component within the INDiGO system architecture, ELISE is intended to function independently, receiving input from various sources. This input might originate from the INDiGO graphical user interface or other automated processes. According to the documentation, the system is designed with the recognition that both search queries and database records can contain errors. This design thus accommodates scenarios such as typos, the inadvertent swapping of first and last name fields, and similar errors, whether they are already present in a record within the database or introduced during the formulation of a search query. Within INDiGO, the search and match functionality operates without specific user distinctions, instead relying on the ELISE system as a data matching service configured universally and integrated into various components of the INDiGO system.40 This prompts the question: what implications does this absence of user-specificity have for the re-identification practices of the IND?

Per the system’s technical documentation, queries originating from IND end-user applications are channeled to the ELISE service, which employs diverse algorithms to compute matches. In practice, the data matching engine assesses the similarity between the input query and all database records, generating a corresponding similarity score. The computation of this match score can be adjusted through system configurations, allowing certain factors to be weighted more or less heavily. The matching process encompasses deterministic data matching algorithms that calculate similarity by considering variations in name spellings, using features such as name initials, and accounting for transposed numbers, as can occur in dates of birth or identification numbers. Additionally, the engine leverages name databases to facilitate advanced matching techniques based on rules or domain knowledge, such as accommodating name transliterations and recognizing variations like “Aleksandra” and its diminutive form “Ola.” Furthermore, the system incorporates probabilistic matching mechanisms, including a feature termed affinity matrices, which enable, for example, the “soft matching” of birth years within a reasonable range. If a search specifies a birth year as 1990, the system can be configured to consider birth years slightly earlier or later, covering a span like 1988 to 1992. By employing these diverse matching features, the system assigns a match score that gauges the likelihood of a match between the search query and the corresponding database entry. These system functionalities raise questions about the influence of ELISE on the re-identification expertise of IND personnel, potentially shifting the locus of expertise from street-level bureaucrats to the system.
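To make the idea of a configurable, weighted match score more tangible, the following minimal sketch combines three of the features mentioned above: a name-variant lookup, the soft matching of birth years, and tolerance for transposed characters in an identification number. Every name, weight, and scoring rule here is an assumption for illustration. The sketch does not reproduce ELISE's algorithms or its affinity matrices.

```python
from difflib import SequenceMatcher

# Hypothetical variant table standing in for a name database.
NAME_VARIANTS = {"ola": "aleksandra"}

# Hypothetical configuration: how heavily each factor counts.
WEIGHTS = {"name": 0.5, "year": 0.2, "id": 0.3}


def name_score(a: str, b: str) -> float:
    """Similarity of two first names after mapping known variants."""
    a = NAME_VARIANTS.get(a.strip().lower(), a.strip().lower())
    b = NAME_VARIANTS.get(b.strip().lower(), b.strip().lower())
    return SequenceMatcher(None, a, b).ratio()


def year_affinity(query_year: int, record_year: int, window: int = 2) -> float:
    """Soft match: 1.0 for the same year, falling linearly to 0 outside the window."""
    diff = abs(query_year - record_year)
    return max(0.0, 1.0 - diff / (window + 1))


def is_transposition(a: str, b: str) -> bool:
    """True if b is a with exactly one pair of adjacent characters swapped."""
    if len(a) != len(b) or a == b:
        return False
    diffs = [i for i in range(len(a)) if a[i] != b[i]]
    return (
        len(diffs) == 2
        and diffs[1] == diffs[0] + 1
        and a[diffs[0]] == b[diffs[1]]
        and a[diffs[1]] == b[diffs[0]]
    )


def id_score(a: str, b: str) -> float:
    """Full credit for identical numbers, partial credit for one transposition."""
    if a == b:
        return 1.0
    return 0.8 if is_transposition(a, b) else 0.0


def match_score(query: dict, record: dict, weights: dict = WEIGHTS) -> float:
    """Weighted average of the per-field scores, between 0 and 1."""
    parts = {
        "name": name_score(query["first_name"], record["first_name"]),
        "year": year_affinity(query["birth_year"], record["birth_year"]),
        "id": id_score(query["doc_number"], record["doc_number"]),
    }
    return sum(weights[k] * parts[k] for k in parts) / sum(weights.values())
```

With a window of two years, a query for 1990 gives partial credit to records with birth years from 1988 to 1992 and none to 1993. Changing the values in `WEIGHTS` is the sketch's counterpart to adjusting the score computation through system configuration.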

The system returns a set of records ranked based on their closeness of match to the query, as opposed to offering a single match in response to a database lookup. By design, the data matching process always yields results, even if no exact match is found. The number of matches returned is also adjustable within the system. It is important to note that, due to its modular structure, the results are sent back to the point of origin of the search, such as being displayed through the INDiGO graphical user interfaces. Consequently, the searching and matching service has limited insight into where within the INDiGO system and process the call is initiated or who is making the query. Subsequent sections will explore how this user-agnostic approach aligns with the actual usage patterns for the IND’s re-identification practices.
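The difference between a ranked result list and a single exact lookup can be sketched as follows. This is an illustrative assumption rather than ELISE's behavior in detail: the function name, the `max_results` parameter, and the similarity measure (Python's `difflib`) are invented for the example. Note that the function takes no argument describing who is searching or from where, which mirrors the user-agnostic design described above.

```python
from difflib import SequenceMatcher


def ranked_search(query_name, records, max_results=3):
    """Score every record against the query and return the best candidates.

    Unlike an exact lookup, this returns results even when nothing matches
    exactly; the caller has to judge whether the top candidate is plausible.
    """
    scored = [
        (SequenceMatcher(None, query_name.lower(), r["name"].lower()).ratio(), r)
        for r in records
    ]
    # Sort on the score only, highest first.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:max_results]


# Hypothetical records; none is an exact match for the query "Russo".
records = [
    {"name": "De Vries"},
    {"name": "Rousseau"},
    {"name": "Nowak"},
    {"name": "Jansen"},
]
results = ranked_search("Russo", records, max_results=2)
```

Here `results` holds two candidates with "Rousseau" ranked first, although no record is spelled "Russo". An exact lookup on the same data would have returned nothing.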

5.4.4 Architectural and system design influences on applicant re-identification at the IND

This section has described architectural and system design elements of the IND composite information environment, and highlighted how they can influence the re-identification of applicants within the IND. The discussion has delineated two main dimensions that shape the IND’s data infrastructure and subsequently impact the re-identification process.

Firstly, because the IND operates as a node within the migration chain, its re-identification practices are not isolated but interconnected with those of the diverse partners comprising the chain. During the initial interaction with an applicant, the agency is tasked with validating whether the individual is already within the records of the migration chain. The v-number, functioning as a unique identifier for foreign nationals in the Netherlands, facilitates the process of re-identification and linkage of applicant data across the chain partners. However, re-identifying applicants becomes considerably more intricate when this identifier is unavailable. The introduction of mechanisms like the PIL strives to establish standardized initial registrations to facilitate smoother re-identification in subsequent stages. In the following section, we will examine the practical aspects of these interactions and delve into the complexities of re-identification, including the interaction between different systems and the challenges faced by IND personnel.

Secondly, the IND’s strategies for re-identification are intertwined with the ELISE data matching system. This system acts as an intermediary in the re-identification of applicants, addressing various uncertainties surrounding identity data. Positioned as a loosely coupled module within the broader INDiGO system and its accompanying databases, ELISE aims to facilitate the process of applicant re-identification. The system’s design acknowledges the inherent uncertainties in search queries and database accuracy. In the upcoming section, we will delve into the empirical data gathered from fieldwork, elucidating three distinct types of data friction that can hinder re-identification, stemming from disparities between the intended designs of systems and their actual practical utilization.

5.5 Putting the design into practice: Investigating the practical application and challenges in the IND’s identification processes

5.5.2 Friction 2: Balancing precision and accuracy in the movement of identity data

This section, along with the next one, will center on three primary aspects of the search and matching process hypothesized to play a role in the successful re-identification of applicants: the formulation of search queries, the calculation of matches, and the handling of search results. This division aligns with the interview protocol’s structure, as elaborated in the section on data collection. The analysis will commence by exploring the challenges associated with formulating search queries. As emphasized in the design discussion, the absence of user-specificity in the design of the search and match functionalities means that these functions are intended to operate uniformly and generically for all users within the IND, without distinct configurations or adaptations tailored to specific user roles or preferences. This design choice might have implications for re-identifying applicants at the IND, as it raises questions about how effectively the system can cater to the diverse needs and practices of different users and departments involved in the re-identification process. The interview excerpts and scenarios presented in this and the subsequent sections were selected because the analysis showed them to be the most illustrative of potential data friction that could impede smooth re-identification when using the search and matching tools.

To start, it is essential to acknowledge that for respondents the lack of user-specificity is not a prominent concern, primarily due to the simplicity of formulating search queries. Participants underscored that the most fundamental and frequently utilized method for searching and re-identifying applicant data within the IND relies on unique identifiers like the v-number. For instance, applicant re-identification might occur while processing new information related to an ongoing application in back-office settings or during direct interactions with applicants at front-office counters. In these cases, the procedure is ongoing at the IND, and staff can thus execute such searches on the system relatively seamlessly, as the applicant is already known to the IND and the v-number can be employed.

However, as highlighted in the subsequent interview excerpts, IND staff members frequently encounter situations where formulating seemingly straightforward search queries becomes complex due to various factors. These scenarios often involve dealing with intricacies stemming from data inaccuracies, misunderstandings, and human errors, especially when data is received or input through diverse means like handwritten documents or phone conversations. In specific departments, such as those handling handwritten documents sent via postal services, employees often grapple with accurately deciphering and transcribing handwritten data, consequently introducing an element of uncertainty into the re-identification process. To illustrate, the following example from an interviewee sheds light on the challenges arising from processing handwritten application forms and managing errors or ambiguities in the provided date of birth:

But then you have to deal with perhaps unclear handwriting. Sometimes there is also an authorized person who fills in that information for them. Then it can, of course, be a human error and just a typo in a date of birth or something like that. (Interview with IND staff member, January 29, 2021)

Another instance of this issue involves the potential confusion between first and last names. IND personnel may encounter situations where the data provided by applicants does not clearly distinguish between these two components. This ambiguity can create uncertainty for the IND staff when using the search fields in INDiGO, as highlighted by the following interview excerpt:

It is also difficult to distinguish between first and last names with certain names. So you have to make slightly different combinations yourself: what can be a first name? What can be a last name? (Interview with IND staff member, November 10, 2020)
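The manual work the interviewee describes, trying different combinations of what could be a first or a last name, can be sketched as a small routine. It is purely illustrative: the function name is hypothetical, and nothing in the fieldwork suggests that INDiGO offers such a helper.

```python
def name_split_candidates(full_name: str):
    """List every way to split a name into (first, last), plus the swapped order.

    For "A B C" this yields ("A", "B C"), ("B C", "A"), ("A B", "C"), ("C", "A B").
    """
    tokens = full_name.split()
    candidates = []
    for i in range(1, len(tokens)):
        first = " ".join(tokens[:i])
        last = " ".join(tokens[i:])
        candidates.append((first, last))
        candidates.append((last, first))  # same split with the fields swapped
    return candidates
```

A two-part name already produces two candidate queries and a three-part name four, which gives a sense of how quickly the number of plausible search formulations grows when the boundary between first and last name is unclear.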

There are instances where the perceived match between applicants might originate from phonetic errors or other subtle variations. For instance, envision a situation where a name like “Rousseau” is misheard or misspelt as “Russo,” resulting in what the interviewee below refers to as a “phonetic slip”:

In many cases, you have the same applicant, but it is only based on a phonetic slip. But in a lot of cases, it’s also that it’s just not the same person. (Interview with IND staff member, January 29, 2021)
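One common way to catch a “phonetic slip” of this kind is a phonetic key such as Soundex, which maps names that sound alike to the same short code. The sketch below implements the standard American Soundex rules. It is offered only as an illustration of the general technique; whether ELISE uses Soundex or some other phonetic method is not established by the documentation discussed here.

```python
# Letter groups of the American Soundex scheme.
_CODES = {}
for _letters, _digit in (
    ("bfpv", "1"),
    ("cgjkqsxz", "2"),
    ("dt", "3"),
    ("l", "4"),
    ("mn", "5"),
    ("r", "6"),
):
    for _ch in _letters:
        _CODES[_ch] = _digit


def soundex(name: str) -> str:
    """Return the four-character Soundex key of a name ("" if it has no letters)."""
    letters = [c for c in name.lower() if c.isalpha()]
    if not letters:
        return ""
    key = letters[0].upper()
    prev = _CODES.get(letters[0], "")
    for ch in letters[1:]:
        if ch in "hw":
            continue  # h and w do not separate letters with the same code
        code = _CODES.get(ch, "")
        if code and code != prev:
            key += code
        prev = code  # vowels reset prev, so a repeated code after a vowel counts
    return (key + "000")[:4]
```

Under these rules “Rousseau” and “Russo” both map to the key R200, so a search on either spelling could surface the other. As the interviewee points out, however, a shared phonetic key only indicates that two names sound alike and says nothing about whether they belong to the same person.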

As previously outlined, the ELISE data matching system is intentionally designed to address such potential errors in search query inputs, aiming to account for possible typos, mixed names, and similar variations during matching. Nonetheless, when I inquired about this aspect during the interviews, there appeared to be a general lack of specific knowledge about how this mechanism functions for search query inputs. However, as noted in the following excerpt, the mechanism that accounts for search input could occasionally lead to confusion in the search results – a subject we will revisit in our subsequent discussion regarding the computation of matches and the handling of search results.

[I]f you search a certain way. […] I just don’t know exactly. There is an exact search on it, so if you make a typo there or write the name slightly differently, you will actually get what you expect in terms of results. [But] When you find the applicant afterwards, you think, hmm, why hadn’t it actually found it on that personal data I had tried first? (Interview with IND staff member, November 10, 2020)

As the excerpts from IND staff members show, the formulation of search queries can become ambiguous and error-prone. These issues are particularly notable when dealing with phone calls or handwritten documents, where elements like v-numbers, dates of birth, or applicants’ names might contain typos or be challenging to read. In these contexts, data friction arises as identity data moves between different material forms and staff face difficulties in comprehending or accurately transcribing information. Consequently, this can hinder the successful re-identification of an applicant. Interestingly, this challenge aligns with the design of the data matching system, which anticipates potential errors in search queries and compensates for such uncertainties. Here we can see how the data matching system functions as a mechanism to alleviate data friction as identity data shifts across various media. However, there is a potential disconnect between users’ expectations of search input accuracy and the system’s ability to accommodate errors and uncertainties in the query.